Note: This is an experimental planet. If you have a feed you would like to add, or you would like your feed to be removed, please contact me.

Planet 9

October 11, 2012

October 07, 2012

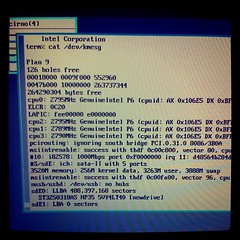

NineTimes - Inferno and Plan 9 News

9FRONT "MOTHY MARTHA" Released

New 9front release "MOTHY MARTHA"

the changes from previous release include:

mothra improvements: fast scroll, text selection

updated manual pages

hjfs improvements and bugfixes

added "Rules of Acquisition" (/lib/roa)

devproc bugfixes

improved guesscpuhz()

fixed tftp lost ack packet bug

added erik's atazz and disk/smart

sata support for 4K sector drives

October 04, 2012

September 23, 2012

Command Center

Thank you Apple

Some days, things just don't work out. Or don't work.

Earlier

I wanted to upgrade (their term, not mine) my iMac from Snow Leopard (10.6) to Lion (10.7). I even had the little USB stick version of the installer, to make it easy. But after spending some time attempting the installation, the Lion installer "app" failed, complaining about SMART errors on the disk.

Disk Utility indeed reported there were SMART errors, and that the disk hardware needed to be replaced. An ugly start.

The good news is that in some places, including where I live, Apple will do a house call for service, so I didn't have to haul the computer to an Apple store on public transit.

Thank you Apple.

I called them, scheduled the service for a few days later, and as instructed by Apple (I hardly needed prompting) prepped a backup using Time Machine.

The day before the repairman was to come to give me a new disk, I made sure the system was fully backed up, for security reasons started a complete erasure of the bad disk (using Disk Utility in target mode from another machine, about which more later), and went to bed.

The day

When I got up, I checked that the disk had been erased and headed off to work. As I left the apartment, the ceiling lights in the entryway flickered and then went out: a new bulb was needed. On the way out of the building, I asked the doorman for a replacement bulb. He offered just to replace it for us. We have a good doorman.

Once at work, things were normal until my cell phone rang about 2pm. It was the Apple repairman, Twinkletoes (some names and details have been changed), calling to tell me he'd be at my place within the hour. Actually, he wasn't an Apple employee, but a contractor working for Unisys, a name I hadn't heard in a long time. (Twinkletoes was a name I hadn't heard for a while either, but that's another story.) At least here, Apple uses Unisys contractors to do their house calls.

So I headed home, arriving before Twinkletoes. At the front door, the doorman stopped me. He reported that the problem with the lights was not the bulb, but the wiring. He'd called in an electrician, who had found a problem in the breaker box and fixed it. Everything was good now.

When I got up to the apartment, I found chaos: the cleaners were mid-job, with carpets rolled up, vacuum cleaners running, and general craziness. Not conducive to work. So I went back down to the lobby with my laptop and sat on the couch, surfing on the free WiFi from the café next door, and waited for Twinkletoes.

Half an hour later, he arrived and we returned to the apartment. The cleaners were still there but the chaos level had dropped and it wasn't too hard to work around them. I saw what the inside of an iMac looks like as Twinkletoes swapped out the drive. By the time he was done, the cleaners had left and things had settled down.

I had assumed that the replacement drive would come with an installed operating system, but I assumed wrong. (When you assume, you put plum paste on your ass.) I had a Snow Leopard installation DVD, but I was worried: it had failed to work for me a few days earlier when I wanted to boot from it to run fsck on the broken drive. Twinkletoes noticed it had a scratch. I needed another way to boot the machine.

It had surprised me when Lion came out that the installation was done by an "app", not as a bootable image. This is an unnecessary complication for those of us that need to maintain machines. Earlier, when updating a different machine, I had learned how painful this could be when the installation app destroyed the boot sector and I needed to reinstall Snow Leopard from DVD, and then upgrade that to a version of the system recent enough to run the Lion installer app. As will become apparent, had Lion come as a bootable image things might have gone more smoothly.

Thank you Apple.

[Note added in post: Several people have told me there's a bootable image inside the installer. I forgot to mention that I knew that, and there wasn't. For some reason, the version on the USB stick I have looks different from the downloaded one I checked out a day or two later, and even Twinkletoes couldn't figure out how to unpack it. Weird.]

Twinkletoes had an OS image he was willing to let me copy, but I needed to make a bootable drive from it. I had no sufficiently large USB stick—you need a 4GB one you can wipe. However I did have a free, big enough CompactFlash card and a USB reader, so that should do, right? Twinkletoes was unsure but believed it would.

Using my laptop, I used Disk Utility to create a bootable image on the CF card from Twinkletoes's disk image. We were ready.

Plug in the machine, push down the Option key, power on.

Nothing.

Turn on the light.

Nothing.

No power.

The cleaners must have tripped a breaker.

I went to the breaker box and found that all the breakers looked OK. We now had a mystery, because the cleaners had had lights on and were using electric appliances—I saw a vacuum cleaner running—but now there was no power. Was the power off to the building? No: the lights still worked in the kitchen and the oven clock was lit. I called the doorman and asked him to get the electrician back as soon as possible and then, with a little portable lamp, went looking around the apartment for a working socket. I found one, again in the kitchen. The iMac was going to travel after all, if not as far as downtown.

The machine was moved, plugged in, option-key-downed, and powered on. I selected the CF card to boot from, waited 15 minutes for the installation to come up, only to have the boot fail. CF cards don't work after all, although the diagnosis of failure is a bit tardy and uninformative.

Thank you Apple.

Next idea. My old laptop has FireWire so we could bring the disk up using target mode and then run the installer on the laptop to install Lion on the iMac.

We did the target mode dance and connected to the newly installed drive, then ran Disk Utility on the laptop to format the drive. Things were starting to look better.

Next, we put the Lion installer stick into the laptop, which was running a recent version of Snow Leopard.

Failure again. This time the problem is that the laptop, all of about four years old, is too old to run Lion. It's got a Core Duo, not a Core 2 Duo, and Lion won't run on that hardware. Even though Lion doesn't need to run, only the Lion installer needs to run, the system refuses to help. My other laptop is new enough to run the installer, but it doesn't have FireWire so it can't do target mode.

Thank you Apple. Your aggressive push to retire old technology hurts sometimes, you know? Actually, more than sometimes, but let's stay on topic.

Twinkletoes has to leave—he's been on the job for several hours now—but graciously lends me a USB boot drive he has, asking me to return it by post when I'm done. I thank him profusely and send him away before he is drawn in any deeper.

Using his boot drive, I was able to bring up the iMac and use the Lion installer stick to get the system to a clean install state. Finally, a computer, although of course all my personal data is over on the backup.

When a new OS X installation comes up, it presents the option of "migrating" data from an existing system, including from a Time Machine backup. So I went for that option and connected the external drive with the Time Machine backup on it.

The Migration Assistant presented a list of disks to migrate from. A list of one: the main drive in the machine. It didn't give me the option of using the Time Machine backup.

Thank you Apple. You told me to save my machine this way but then I can't use this backup to recover.

I called Apple on my cell phone (there's still no power in the room with the land line's wireless base station) and explained the situation. The sympathetic but ultimately unhelpful person on the phone said it should work (of course!) and that I should run Software Update and get everything up to the latest version. He reported that there were problems with the Migration Assistant in early versions of the Lion OS, and my copy of the installer was pretty early.

I started the upgrade process, which would take a couple of hours, and took my laptop back down to the lobby for some free WiFi to kill time. But it's now evening, the café is closed, and there is no WiFi. Naturally.

Back to the apartment, grab a book, return to the lobby to wait for the electrician.

An hour or so later, the electrician arrived and we returned to the apartment to see what was wrong. It was easy to diagnose. He had made a mistake in the fix, in fact a mistake related to what was causing the original problem. The breaker box has a silly design that makes it too easy to break a connection when working in the box, and that's what had happened. So it was easy to fix and easy to verify that it was fixed, but also easy to understand why it had happened. No excuses, but problem solved and power was now restored.

The computer was still upgrading but nearly done, so a few minutes later I got to try migrating again. Same result, naturally, and another call to Apple and this time little more than an apology. The unsatisfactory solution: do a clean installation and manually restore what's important from the Time Machine backup.

Thank you Apple.

It was fairly straightforward, if slow, to restore my personal files from the home directory on the backup, but the situation for installed software was dire. Restoring an installed program, either using the ludicrous Time Machine UI or copying the files by hand, is insufficient in most cases to bring back the program because you also need manifests and keys and receipts and whatnot. As a result, things such as iWork (Keynote etc.) and Aperture wouldn't run. I could copy every piece of data I could find but the apps refused to let me run them. Despite many attempts digging far too deep into the system, I could not get the right pieces back from the Time Machine backup. Worse, the failure modes were appalling: crashes, strange display states, inexplicable non-workiness. A frustrating mess, but structured perfectly to belong on this day.

For peculiar reasons I didn't have the installation disks for everything handy, so these (expensive!) programs were just gone, even though I had backed up everything as instructed.

Thank you Apple.

I did have some installation disks, so for instance I was able to restore Lightroom and Photoshop, but then of course I needed to wait for huge updates to download even though the data needed was already sitting on the backup drive.

Back on the phone for the other stuff. Because I could prove that I had paid for the software, Apple agreed to send me fresh installation disks for everything of theirs but Aperture, but that would take time. In fact, it took almost a month for the iWork DVD to arrive, which is unacceptably long. I even needed to call twice to remind them before the disks were shipped.

The Aperture story was more complicated. After a marathon debugging session I managed to get it to start but then it needed the install key to let me do anything. I didn't have the disk, so I didn't know the key. Now, Aperture is from part of the company called Pro Tools or something like that, and they have a different way of working. I needed to contact them separately to get Aperture back. It's important to understand I hadn't lost my digital images. They were backed up multiple times, including in the network, on the Time Machine backup, and also on an external drive using the separate "vault" mechanism that is one of the best features of Aperture.

I reached the Aperture people on the phone and after a condensed version of the story convinced them I needed an install key (serial number) to run the version of Aperture I'd copied from the Time Machine backup. I was berated by the person on the phone: Time Machine is not suitable for backing up Aperture databases. (What? Your own company's backup solution doesn't know how to back up? Thank you Apple.) After a couple more rounds of abuse, I convinced the person on the phone that a) I was backing up my database as I should, using an Aperture vault and b) it wasn't the database that was the problem, but the program. I was again told that wasn't a suitable way to back up (again, What?), at which point I surrendered and just begged for an installation key, which was provided, and I could again run Aperture. This was the only time in the story where the people I was interacting with were not at least sympathetic to my situation. I guess Pro is a synonym for unfriendly.

Thank you Apple.

There's much more to the story. It took weeks to get everything working again properly. The complete failure of Time Machine to back up my computer's state properly was shocking to me. After this fiasco, I learned about the Lion Recovery App, which everyone who uses Macs should know about, but was not introduced until well after Lion rolled out with its preposterous not-bootable installation setup. The amount of data I already had on my backup disk but that needed to be copied from the net again was laughable. And there were total mysteries, like GMail hanging forever for the first day or so, a problem that may be unrelated or may just be the way life was this day.

But, well after midnight, worn out, beat up, tired, but with electricity restored and a machine that had a little life in it again, I powered down, took the machine back to my office and started to get ready for bed. Rest was needed and I had had enough of technology for one day.

One more thing

Oh yes, one more thing. There's always one more thing in our technological world.

I walked into the bathroom for my evening ablutions only to have the toilet seat come off completely in my hand.

Just because you started it all, even for this,

Thank you Apple.

September 22, 2012

September 18, 2012

NineTimes - Inferno and Plan 9 News

9FRONT "OFF KEY LEE" Released

New 9front release "OFF KEY LEE"

changes include:

ACPI improvement (AliasOp AML instruction)

USB unassigned PciINTL workaround

Intel SCH busmastering dma support

mothra improvements (<script> tag, line break layout bugfix)

HTML support for lp

rio one line increment scroll (Shift)

September 17, 2012

research!rsc

A Tour of Acme

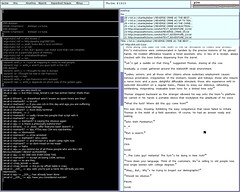

People I work with recognize my computer easily: it's the one with nothing but yellow windows and blue bars on the screen. That's the text editor acme, written by Rob Pike for Plan 9 in the early 1990s. Acme focuses entirely on the idea of text as user interface. It's difficult to explain acme without seeing it, though, so I've put together a screencast explaining the basics of acme and showing a brief programming session. Remember as you watch the video that the 854x480 screen is quite cramped. Usually you'd run acme on a larger screen: even my MacBook Air has almost four times as much screen real estate.

The video doesn't show everything acme can do, nor does it show all the ways you can use it. Even small idioms like where you type text to be loaded or executed vary from user to user. To learn more about acme, read Rob Pike's paper “Acme: A User Interface for Programmers” and then try it.

Acme runs on most operating systems. If you use Plan 9 from Bell Labs, you already have it. If you use FreeBSD, Linux, OS X, or most other Unix clones, you can get it as part of Plan 9 from User Space. If you use Windows, I suggest trying acme as packaged in acme stand alone complex, which is based on the Inferno programming environment.

Mini-FAQ:

- Q. Can I use scalable fonts? A. On the Mac, yes. If you run

      acme -f /mnt/font/Monaco/16a/font

  you get 16-point anti-aliased Monaco as your font, served via fontsrv. If you'd like to add X11 support to fontsrv, I'd be happy to apply the patch.

- Q. Do I need X11 to build on the Mac? A. No. The build will complain that it cannot build ‘snarfer’ but it should complete otherwise. You probably don't need snarfer.

If you're interested in history, the predecessor to acme was called help. Rob Pike's paper “A Minimalist Global User Interface” describes it. See also “The Text Editor sam”.

Correction: the smiley program in the video was written by Ken Thompson. I got it from Dennis Ritchie, the more meticulous archivist of the pair.

September 07, 2012

September 06, 2012

NineTimes - Inferno and Plan 9 News

9FRONT "ROBBY RUBBISH" Released

New 9front "Robby Rubbish"

Changes include support for Intel 82567V ethernet and USB fixes for isochronous transfers (usb audio) and support for SK-8835 IBM Thinkpad USB Keyboard/Mice. date(1) got support to print ISO-8601 date and time and devshr now honors the noattach flag (RFNOMNT).

August 30, 2012

NineTimes - Inferno and Plan 9 News

9FRONT "RELEASE TARGET GERONIMO" Released

New 9front "Release Target Geronimo"

changes include:

aux/cpuid program to print Intel cpu features

netaudit program which checks network database for common mistakes

bugfixes for ndb/dns

fixed old RFNOMNT kernel bug

fix sleep / wakeup bug in devmnt and sdvirtio

pause function in games/gb

rio listing buried windows in button 3 menu

audiohda support for Intel SCH Poulsbo and Oaktrail

usb/kb support for Microsoft Sidewinder X5 Mouse

default configuration for 9bootpxe

tls support for sha256WithRSAEncryption (torproject.com)

bugfixes in jpg

August 12, 2012

NineTimes - Inferno and Plan 9 News

9FRONT "GROOVY GREG" Released

New 9front release "Groovy Greg"

changes include:

- new experimental hjfs filesystem (also available as install option)

- native graphics support fixed for vmware and qemu

- ps -n option to print note group

- ppp noauth option

- various bug fixes (icmp frag handling, pci *nobios, audioac97, floppy driver, zipfs, eenter())

August 03, 2012

August 01, 2012

NineTimes - Inferno and Plan 9 News

9FRONT "DEAF GEOFF" Released

New 9front release "Deaf Geoff"

this release consists mainly of bugfixes (cwfs, kfs, factotum, mothra) tho cdfs got updated from sources and sam undo command will jump to the change now (thanks aiju!) ;)

July 04, 2012

Command Center

Less is exponentially more

Here is the text of the talk I gave at the Go SF meeting in June, 2012.

This is a personal talk. I do not speak for anyone else on the Go team here, although I want to acknowledge right up front that the team is what made and continues to make Go happen. I'd also like to thank the Go SF organizers for giving me the opportunity to talk to you.

I was asked a few weeks ago, "What was the biggest surprise you encountered rolling out Go?" I knew the answer instantly: Although we expected C++ programmers to see Go as an alternative, instead most Go programmers come from languages like Python and Ruby. Very few come from C++.

We—Ken, Robert and myself—were C++ programmers when we designed a new language to solve the problems that we thought needed to be solved for the kind of software we wrote. It seems almost paradoxical that other C++ programmers don't seem to care.

I'd like to talk today about what prompted us to create Go, and why the result should not have surprised us like this. I promise this will be more about Go than about C++, and that if you don't know C++ you'll be able to follow along.

The answer can be summarized like this: Do you think less is more, or less is less?

Here is a metaphor, in the form of a true story. Bell Labs centers were originally assigned three-digit numbers: 111 for Physics Research, 127 for Computing Sciences Research, and so on. In the early 1980s a memo came around announcing that as our understanding of research had grown, it had become necessary to add another digit so we could better characterize our work. So our center became 1127. Ron Hardin joked, half-seriously, that if we really understood our world better, we could drop a digit and go down from 127 to just 27. Of course management didn't get the joke, nor were they expected to, but I think there's wisdom in it. Less can be more. The better you understand, the pithier you can be.

Keep that idea in mind.

Back around September 2007, I was doing some minor but central work on an enormous Google C++ program, one you've all interacted with, and my compilations were taking about 45 minutes on our huge distributed compile cluster. An announcement came around that there was going to be a talk presented by a couple of Google employees serving on the C++ standards committee. They were going to tell us what was coming in C++0x, as it was called at the time. (It's now known as C++11).

In the span of an hour at that talk we heard about something like 35 new features that were being planned. In fact there were many more, but only 35 were described in the talk. Some of the features were minor, of course, but the ones in the talk were at least significant enough to call out. Some were very subtle and hard to understand, like rvalue references, while others are especially C++-like, such as variadic templates, and some others are just crazy, like user-defined literals.

At this point I asked myself a question: Did the C++ committee really believe that what was wrong with C++ was that it didn't have enough features? Surely, in a variant of Ron Hardin's joke, it would be a greater achievement to simplify the language rather than to add to it. Of course, that's ridiculous, but keep the idea in mind.

Just a few months before that C++ talk I had given a talk myself, which you can see on YouTube, about a toy concurrent language I had built way back in the 1980s. That language was called Newsqueak and of course it is a precursor to Go.

I gave that talk because there were ideas in Newsqueak that I missed in my work at Google and I had been thinking about them again. I was convinced they would make it easier to write server code and Google could really benefit from that.

I actually tried and failed to find a way to bring the ideas to C++. It was too difficult to couple the concurrent operations with C++'s control structures, and in turn that made it too hard to see the real advantages. Plus C++ just made it all seem too cumbersome, although I admit I was never truly facile in the language. So I abandoned the idea.

But the C++0x talk got me thinking again. One thing that really bothered me—and I think Ken and Robert as well—was the new C++ memory model with atomic types. It just felt wrong to put such a microscopically-defined set of details into an already over-burdened type system. It also seemed short-sighted, since it's likely that hardware will change significantly in the next decade and it would be unwise to couple the language too tightly to today's hardware.

We returned to our offices after the talk. I started another compilation, turned my chair around to face Robert, and started asking pointed questions. Before the compilation was done, we'd roped Ken in and had decided to do something. We did not want to be writing in C++ forever, and we—me especially—wanted to have concurrency at my fingertips when writing Google code. We also wanted to address the problem of "programming in the large" head on, about which more later.

We wrote on the white board a bunch of stuff that we wanted, desiderata if you will. We thought big, ignoring detailed syntax and semantics and focusing on the big picture.

I still have a fascinating mail thread from that week. Here are a couple of excerpts:

Robert: Starting point: C, fix some obvious flaws, remove crud, add a few missing features.

Rob: name: 'go'. you can invent reasons for this name but it has nice properties. it's short, easy to type. tools: goc, gol, goa. if there's an interactive debugger/interpreter it could just be called 'go'. the suffix is .go.

Robert: Empty interfaces: interface {}. These are implemented by all interfaces, and thus this could take the place of void*.

We didn't figure it all out right away. For instance, it took us over a year to figure out arrays and slices. But a significant amount of the flavor of the language emerged in that first couple of days.

Notice that Robert said C was the starting point, not C++. I'm not certain but I believe he meant C proper, especially because Ken was there. But it's also true that, in the end, we didn't really start from C. We built from scratch, borrowing only minor things like operators and brace brackets and a few common keywords. (And of course we also borrowed ideas from other languages we knew.) In any case, I see now that we reacted to C++ by going back down to basics, breaking it all down and starting over. We weren't trying to design a better C++, or even a better C. It was to be a better language overall for the kind of software we cared about.

In the end of course it came out quite different from either C or C++. More different even than many realize. I made a list of significant simplifications in Go over C and C++:

- regular syntax (don't need a symbol table to parse)

- garbage collection (only)

- no header files

- explicit dependencies

- no circular dependencies

- constants are just numbers

- int and int32 are distinct types

- letter case sets visibility

- methods for any type (no classes)

- no subtype inheritance (no subclasses)

- package-level initialization and well-defined order of initialization

- files compiled together in a package

- package-level globals presented in any order

- no arithmetic conversions (constants help)

- interfaces are implicit (no "implements" declaration)

- embedding (no promotion to superclass)

- methods are declared as functions (no special location)

- methods are just functions

- interfaces are just methods (no data)

- methods match by name only (not by type)

- no constructors or destructors

- postincrement and postdecrement are statements, not expressions

- no preincrement or predecrement

- assignment is not an expression

- evaluation order defined in assignment, function call (no "sequence point")

- no pointer arithmetic

- memory is always zeroed

- legal to take address of local variable

- no "this" in methods

- segmented stacks

- no const or other type annotations

- no templates

- no exceptions

- builtin string, slice, map

- array bounds checking
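A few of the items in this list can be seen together in a small sketch. The names used here (`Celsius`, `point`, `newCounter`, `widen`) are invented for illustration and do not come from the talk; the program only demonstrates methods on a non-struct type, letter case setting visibility, zeroed memory, taking the address of a local variable, increment as a statement, and the distinction between int and int32.

```go
package main

import "fmt"

// Celsius is a hypothetical named type. Methods can be attached to any
// named type defined in the package, not only to structs.
type Celsius float64

// Fahrenheit is visible outside the package because its name starts
// with an upper-case letter.
func (c Celsius) Fahrenheit() float64 {
	return float64(c)*9/5 + 32
}

// point is package-private (lower-case name) and has no constructor.
type point struct{ x, y int }

// newCounter returns the address of a local variable. This is legal:
// the variable stays alive for as long as it is reachable.
func newCounter() *int {
	n := 0
	return &n
}

// widen shows that int and int32 are distinct types. Mixing them needs
// an explicit conversion, while the untyped constant 1 fits either.
func widen(small int32) int {
	return int(small) + 1
}

func main() {
	var p point // memory is always zeroed, so p.x and p.y are 0
	c := newCounter()
	*c++ // increment is a statement, not an expression
	fmt.Println(p, *c, widen(41), Celsius(100).Fahrenheit())
}
```

Writing `y := *c++` or `int(small) + someInt32` in this program would be rejected by the compiler, which is the point of those entries in the list.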

And yet, with that long list of simplifications and missing pieces, Go is, I believe, more expressive than C or C++. Less can be more.

But you can't take out everything. You need building blocks such as an idea about how types behave, and syntax that works well in practice, and some ineffable thing that makes libraries interoperate well.

We also added some things that were not in C or C++, like slices and maps, composite literals, expressions at the top level of the file (which is a huge thing that mostly goes unremarked), reflection, garbage collection, and so on. Concurrency, too, naturally.
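As a rough illustration of some of these additions, the sketch below uses a composite literal, a slice, a map, and reflection. The `release` type and the helper names are hypothetical, chosen only for the example.

```go
package main

import (
	"fmt"
	"reflect"
)

// release is a hypothetical record type used only for illustration.
type release struct {
	Name  string
	Fixes []string
}

// wordCount builds a map from each word to its number of occurrences.
func wordCount(words []string) map[string]int {
	counts := make(map[string]int)
	for _, w := range words {
		counts[w]++ // a missing key reads as the zero value, 0
	}
	return counts
}

// kindOf uses reflection to report the underlying kind of any value.
func kindOf(v interface{}) string {
	return reflect.TypeOf(v).Kind().String()
}

func main() {
	// Composite literal: the struct and its slice are built in one expression.
	r := release{Name: "example", Fixes: []string{"dns", "jpg"}}
	r.Fixes = append(r.Fixes, "usb") // slices grow with append

	fmt.Println(r.Name, r.Fixes[1:])
	fmt.Println(wordCount([]string{"go", "c", "go"}))
	fmt.Println(kindOf(r), kindOf(r.Fixes))
}
```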

One thing that is conspicuously absent is of course a type hierarchy. Allow me to be rude about that for a minute.

Early in the rollout of Go I was told by someone that he could not imagine working in a language without generic types. As I have reported elsewhere, I found that an odd remark.

To be fair he was probably saying in his own way that he really liked what the STL does for him in C++. For the purpose of argument, though, let's take his claim at face value.

What it says is that he finds writing containers like lists of ints and maps of strings an unbearable burden. I find that an odd claim. I spend very little of my programming time struggling with those issues, even in languages without generic types.

But more important, what it says is that types are the way to lift that burden. Types. Not polymorphic functions or language primitives or helpers of other kinds, but types.

That's the detail that sticks with me.

Programmers who come to Go from C++ and Java miss the idea of programming with types, particularly inheritance and subclassing and all that. Perhaps I'm a philistine about types but I've never found that model particularly expressive.

My late friend Alain Fournier once told me that he considered the lowest form of academic work to be taxonomy. And you know what? Type hierarchies are just taxonomy. You need to decide what piece goes in what box, every type's parent, whether A inherits from B or B from A. Is a sortable array an array that sorts or a sorter represented by an array? If you believe that types address all design issues you must make that decision.

I believe that's a preposterous way to think about programming. What matters isn't the ancestor relations between things but what they can do for you.

That, of course, is where interfaces come into Go. But they're part of a bigger picture, the true Go philosophy.

If C++ and Java are about type hierarchies and the taxonomy of types, Go is about composition.

Doug McIlroy, the eventual inventor of Unix pipes, wrote in 1964 (!):

We should have some ways of coupling programs like garden hose--screw in another segment when it becomes necessary to massage data in another way. This is the way of IO also.

That is the way of Go also. Go takes that idea and pushes it very far. It is a language of composition and coupling.

The obvious example is the way interfaces give us the composition of components. It doesn't matter what that thing is, if it implements method M I can just drop it in here.
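A minimal sketch of that idea follows. `Shouter` stands in for "method M", and `Dog`, `Banner`, and `Announce` are invented names; nothing here is from the talk itself. Neither concrete type declares that it implements the interface. Having the method is enough.

```go
package main

import (
	"fmt"
	"strings"
)

// Shouter is a hypothetical one-method interface standing in for "method M".
type Shouter interface {
	Shout() string
}

// Dog satisfies Shouter simply by having a Shout method.
type Dog struct{}

func (Dog) Shout() string { return "woof" }

// Banner is a string type with the same method. It is unrelated to Dog.
type Banner string

func (b Banner) Shout() string { return strings.ToUpper(string(b)) }

// Announce accepts anything that can Shout, whatever it is.
func Announce(items ...Shouter) string {
	parts := make([]string, 0, len(items))
	for _, it := range items {
		parts = append(parts, it.Shout())
	}
	return strings.Join(parts, " ")
}

func main() {
	fmt.Println(Announce(Dog{}, Banner("less is more")))
}
```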

Another important example is how concurrency gives us the composition of independently executing computations.
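One way to picture this, in the spirit of the garden hose quote, is a pipeline of goroutines joined by channels. This is only a sketch; the stage names `generate`, `square`, and `sum` are made up for the example.

```go
package main

import "fmt"

// generate sends 1..n on a channel from its own goroutine, then closes it.
func generate(n int) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out)
		for i := 1; i <= n; i++ {
			out <- i
		}
	}()
	return out
}

// square reads values from in and sends their squares downstream.
func square(in <-chan int) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out)
		for v := range in {
			out <- v * v
		}
	}()
	return out
}

// sum drains the channel and returns the total.
func sum(in <-chan int) int {
	total := 0
	for v := range in {
		total += v
	}
	return total
}

func main() {
	// Each stage runs independently; the channels are the couplings.
	fmt.Println(sum(square(generate(4))))
}
```

Adding another stage means screwing in another function with the same channel-in, channel-out shape; the existing stages do not change.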

And there's even an unusual (and very simple) form of type composition: embedding.
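A short sketch of embedding, using hypothetical `Logger` and `Server` types: the outer struct gains the inner type's methods, but there is no subclass relationship and the inner value stays an ordinary field.

```go
package main

import "fmt"

// Logger is a hypothetical helper type with one method.
type Logger struct{ prefix string }

func (l Logger) Format(msg string) string { return l.prefix + ": " + msg }

// Server embeds Logger. Logger's methods can be called on a Server,
// but a Server is not a kind of Logger.
type Server struct {
	Logger
	Addr string
}

func main() {
	s := Server{Logger: Logger{prefix: "srv"}, Addr: "localhost:0"}

	// The embedded method is called directly on the outer value.
	fmt.Println(s.Format("listening on " + s.Addr))

	// The embedded value is still reachable as a field named after its type.
	fmt.Println(s.Logger.Format("same method, explicit path"))
}
```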

These compositional techniques are what give Go its flavor, which is profoundly different from the flavor of C++ or Java programs.

===========

There's an unrelated aspect of Go's design I'd like to touch upon: Go was designed to help write big programs, written and maintained by big teams.

There's this idea about "programming in the large" and somehow C++ and Java own that domain. I believe that's just a historical accident, or perhaps an industrial accident. But the widely held belief is that it has something to do with object-oriented design.

I don't buy that at all. Big software needs methodology to be sure, but not nearly as much as it needs strong dependency management and clean interface abstraction and superb documentation tools, none of which is served well by C++ (although Java does noticeably better).

We don't know yet, because not enough software has been written in Go, but I'm confident Go will turn out to be a superb language for programming in the large. Time will tell.

===========

Now, to come back to the surprising question that opened my talk:

Why does Go, a language designed from the ground up for what C++ is used for, not attract more C++ programmers?

Jokes aside, I think it's because Go and C++ are profoundly different philosophically.

C++ is about having it all there at your fingertips. I found this quote on a C++11 FAQ:

The range of abstractions that C++ can express elegantly, flexibly, and at zero costs compared to hand-crafted specialized code has greatly increased.

That way of thinking just isn't the way Go operates. Zero cost isn't a goal, at least not zero CPU cost. Go's claim is that minimizing programmer effort is a more important consideration.

Go isn't all-encompassing. You don't get everything built in. You don't have precise control of every nuance of execution. For instance, you don't have RAII. Instead you get a garbage collector. You don't even get a memory-freeing function.

What you're given is a set of powerful but easy to understand, easy to use building blocks from which you can assemble—compose—a solution to your problem. It might not end up quite as fast or as sophisticated or as ideologically motivated as the solution you'd write in some of those other languages, but it'll almost certainly be easier to write, easier to read, easier to understand, easier to maintain, and maybe safer.

To put it another way, oversimplifying of course:

Python and Ruby programmers come to Go because they don't have to surrender much expressiveness, but gain performance and get to play with concurrency.

C++ programmers don't come to Go because they have fought hard to gain exquisite control of their programming domain, and don't want to surrender any of it. To them, software isn't just about getting the job done, it's about doing it a certain way.

The issue, then, is that Go's success would contradict their world view.

And we should have realized that from the beginning. People who are excited about C++11's new features are not going to care about a language that has so much less. Even if, in the end, it offers so much more.

Thank you.

June 24, 2012

June 21, 2012

research!rsc

A Tour of Go

Last week, I gave a talk about Go at the Boston Google Developers Group meeting. There were some problems with the recording, so I have rerecorded the talk as a screencast and posted it on YouTube.

Here are the answers to questions asked at the end of the talk.

Q. How does Go work with debuggers?

To start, both Go toolchains include debugging information that gdb can read in the final binaries, so basic gdb functionality works on Go programs just as it does on C programs.

We’ve talked for a while about a custom Go debugger, but there isn’t one yet.

Many of the programs we want to debug are live, running programs. The net/http/pprof package provides debugging information like goroutine stacks, memory profiling, and cpu profiling in response to special HTTP requests.
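A small sketch of the net/http/pprof idea follows. To keep it self-contained it calls the registered handler in-process through `net/http/httptest` instead of starting a server. The helper name `goroutineProfile` is made up for this example.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	_ "net/http/pprof" // registers the /debug/pprof/ handlers on http.DefaultServeMux
)

// goroutineProfile asks the pprof handler for a text dump of all goroutine
// stacks, the same data a live server would return over HTTP.
func goroutineProfile() (int, string) {
	req := httptest.NewRequest("GET", "/debug/pprof/goroutine?debug=1", nil)
	rec := httptest.NewRecorder()
	http.DefaultServeMux.ServeHTTP(rec, req)
	return rec.Code, rec.Body.String()
}

func main() {
	// A real server would instead call something like
	// http.ListenAndServe("localhost:6060", nil) and be queried from outside.
	code, body := goroutineProfile()
	fmt.Println("status:", code)
	fmt.Print(body)
}
```

In a long-running program the blank import is usually all that is needed, provided the program already serves HTTP on the default mux.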

Q. If a goroutine is stuck reading from a channel with no other references, does the goroutine get garbage collected?

No. From the garbage collection point of view, both sides of the channel are represented by the same pointer, so it can’t distinguish the receive and send sides. Even if we could detect this situation, we’ve found that it’s very useful to keep these goroutines around, because the program is probably heading for a deadlock. When a Go program deadlocks, it prints all its goroutine stacks and then exits. If we garbage collected the goroutines as they got stuck, the deadlock handler wouldn’t have anything useful to print except "your entire program has been garbage collected".

Q. Can a C++ program call into Go?

We wrote a tool called cgo so that Go programs can call into C, and we’ve implemented support for Go in SWIG, so that Go programs can call into C++. In those programs, the C or C++ can in turn call back into Go. But we don’t have support for a C or C++ program—one that starts execution in the C or C++ world instead of the Go world—to call into Go.

The hardest part of the cross-language calls is converting between the C calling convention and the Go calling convention, specifically with regard to the implementation of segmented stacks. But that’s been done and works.

Making the assumption that these mixed-language binaries start in Go has simplified a number of parts of the implementation. I don’t anticipate any technical surprises involved in removing these assumptions. It’s just work.

Q. What are the areas in which you specifically are trying to improve the language?

For the most part, I’m not trying to improve the language itself. Part of the effort in preparing Go 1 was to identify what we wanted to improve and do it. Many of the big changes were based on two or three years of experience writing Go programs, and they were changes we’d been putting off because we knew that they’d be disruptive. But now that Go 1 is out, we want to stop changing things and spend another few years using the language as it exists today. At this point we don’t have enough experience with Go 1 to know what really needs improvement.

My Go work is a small amount of fixing bugs in the libraries or in the compiler and a little bit more work trying to improve the performance of what’s already there.

Q. What about talking to databases and web services?

For databases, one of the packages we added in Go 1 is a standard database/sql package. That package defines a standard API for interacting with SQL databases, and then people can implement drivers that connect the API to specific database implementations like SQLite or MySQL or Postgres.

For web services, you’ve seen the support for JSON and XML encodings. Those are typically good enough for ad hoc REST services. I recently wrote a package for connecting to the SmugMug photo hosting API, and there’s one generic call that unmarshals the response into a struct of the appropriate type, using json.Unmarshal. I expect that XML-based web services like SOAP could be framed this way too, but I’m not aware of anyone who’s done that.
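The "one generic call" pattern might look roughly like this. The `Album` type, its fields, and the `decode` helper are hypothetical; they do not describe the SmugMug API or the package mentioned above.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Album is a hypothetical response type. The field names are invented for
// this sketch and do not describe any real photo-hosting API.
type Album struct {
	ID    int    `json:"id"`
	Title string `json:"title"`
}

// decode is the single generic call: it unmarshals a JSON response body
// into whatever struct pointer the caller supplies.
func decode(body []byte, v interface{}) error {
	return json.Unmarshal(body, v)
}

func main() {
	body := []byte(`{"id": 7, "title": "Boston"}`)
	var a Album
	if err := decode(body, &a); err != nil {
		fmt.Println("decode failed:", err)
		return
	}
	fmt.Println(a.ID, a.Title)
}
```

Each API method then only needs to declare a struct of the right shape; the decoding code is shared.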

Inside Google, of course, we have plenty of services, but they’re based on protocol buffers, so of course there’s a good protocol buffer library for Go.

Q. What about generics? How far off are they?

People have asked us about generics from day 1. The answer has always been, and still is, that it’s something we’ve put a lot of thought into, but we haven’t yet found an approach that we think is a good fit for Go. We’ve talked to people who have been involved in the design of generics in other languages, and they’ve almost universally cautioned us not to rush into something unless we understand it very well and are comfortable with the implications. We don’t want to do something that we’ll be stuck with forever and regret.

Also, speaking for myself, I don’t miss generics when I write Go programs. What’s there, having built-in support for arrays, slices, and maps, seems to work very well.

Finally, we just made this promise about backwards compatibility with the release of Go 1. If we did add some form of generics, my guess is that some of the existing APIs would need to change, which can’t happen until Go 2, which I think is probably years away.

Q. What types of projects does Google use Go for?

Most of the things we use Go for I can’t talk about. One notable exception is that Go is an App Engine language, which we announced at I/O last year. Another is vtocc, a MySQL load balancer used to manage database lookups in YouTube’s core infrastructure.

Q. How does the Plan 9 toolchain differ from other compilers?

It’s a completely incompatible toolchain in every way. The main difference is that object files don’t contain machine code in the sense of having the actual instruction bytes that will be used in the final binary. Instead they contain a custom encoding of the assembly listings, and the linker is in charge of turning those into actual machine instructions. This means that the assembler, C compiler, and Go compiler don’t all duplicate this logic. The main change for Go is the support for segmented stacks.

I should add that we love the fact that we have two completely different compilers, because it keeps us honest about really implementing the spec.

Q. What are segmented stacks?

One of the problems in threaded C programs is deciding how big a stack each thread should have. If the stack is too small, then the thread might run out of stack and cause a crash or silent memory corruption, and if the stack is too big, then you’re wasting memory. In Go, each goroutine starts with a small stack, typically 4 kB, and then each function checks if it is about to run out of stack and if so allocates a new stack segment that gets recycled once it’s not needed anymore.

Gccgo supports segmented stacks, but it requires support added recently to the new GNU linker, gold, and that support is only implemented for x86-32 and x86-64.

Segmented stacks are something that lots of people have done before in experimental or research systems, but they have never made it into the C toolchains.

Q. What is the overhead of segmented stacks?

It’s a few instructions per function call. It’s been a long time since I tried to measure the precise overhead, but in most programs I expect it to be not more than 1-2%. There are definitely things we could do to try to reduce that, but it hasn’t been a concern.

Q. Do goroutine stacks adapt in size?

The initial stack allocated for a goroutine does not adapt. It’s always 4k right now. It has been other values in the past but always a constant. One of the things I’d like to do is to look at what the goroutine will be running and adjust the stack accordingly, but I haven’t.

Q. Are there any short-term plans for dynamic loading of modules?

No. I don’t think there are any technical surprises, but assuming that everything is statically linked simplified some of the implementation. Like with calling Go from C++ programs, I believe it’s just work.

Gccgo might be closer to support for this, but I don’t believe that it supports dynamic loading right now either.

Q. How much does the language spec say about reflection?

The spec is intentionally vague about reflection, but package reflect’s API is definitely part of the Go 1 definition. Any conforming implementation would need to implement that API. In fact, gc and gccgo do have different implementations of that package reflect API, but then the packages that use reflect like fmt and json can be shared.

Q. Do you have a release schedule?

We don’t have any fixed release schedule. We’re not keeping things secret, but we’re also not making commitments to specific timelines.

Go 1 was in progress publicly for months, and if you watched you could see the bug count go down and the release candidates announced, and so on.

Right now we’re trying to slow down. We want people to write things using Go, which means we need to make it a stable foundation to build on. Go 1.0.1, the first bug release, was released four weeks after Go 1, and Go 1.0.2 was seven weeks after Go 1.0.1.

Q. Where do you see Go in five years? What languages will it replace?

I hope that it will still be at golang.org, that the Go project will still be thriving and relevant. We built it to write the kinds of programs we’ve been writing in C++, Java, and Python, but we’re not trying to go head-to-head with those languages. Each of those has definite strengths that make them the right choice for certain situations. We think that there are plenty of situations, though, where Go is a better choice.

If Go doesn’t work out, and for some reason in five years we’re programming in something else, I hope the something else would have the features I talked about, specifically the Go way of doing interfaces and the Go way of handling concurrency.

If Go fails but some other language with those two features has taken over the programming landscape, if we can move the computing world to a language with those two features, then I’d be sad about Go but happy to have gotten to that situation.

Q. What are the limits to scalability with building a system with many goroutines?

The primary limit is the memory for the goroutines. Each goroutine starts with a 4kB stack and a little more per-goroutine data, so the overhead is between 4kB and 5kB. That means on this laptop I can easily run 100,000 goroutines, in 500 MB of memory, but a million goroutines is probably too much.

For a lot of simple goroutines, the 4 kB stack is probably more than necessary. If we worked on getting that down we might be able to handle even more goroutines. But remember that this is in contrast to C threads, where 64 kB is a tiny stack and 1-4MB is more common.

Q. How would you build a traditional barrier using channels?

It’s important to note that channels don’t attempt to be a concurrency Swiss army knife. Sometimes you do need other concepts, and the standard sync package has some helpers. I’d probably use a sync.WaitGroup.

If I had to use channels, I would do it like in the web crawler example, with a channel that all the goroutines write to, and a coordinator that knows how many responses it expects.
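Both approaches can be sketched in a few lines. The function names and the trivial work done by each goroutine are placeholders chosen for this example.

```go
package main

import (
	"fmt"
	"sync"
)

// runWithWaitGroup starts n workers and blocks until all of them finish.
func runWithWaitGroup(n int) int {
	var wg sync.WaitGroup
	var mu sync.Mutex
	done := 0
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			mu.Lock()
			done++ // stand-in for real work
			mu.Unlock()
		}()
	}
	wg.Wait() // the barrier: returns once every worker has called Done
	return done
}

// runWithChannel uses one shared channel. The coordinator knows how many
// responses to expect and simply receives that many.
func runWithChannel(n int) int {
	results := make(chan int)
	for i := 0; i < n; i++ {
		go func(id int) { results <- id }(i)
	}
	sum := 0
	for i := 0; i < n; i++ {
		sum += <-results
	}
	return sum
}

func main() {
	fmt.Println(runWithWaitGroup(8))
	fmt.Println(runWithChannel(4))
}
```

The channel version also collects a value from each goroutine, which is why it suits the crawler-style coordinator; the WaitGroup version is simpler when only completion matters.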

Q. What is an example of the kind of application you’re working on performance for? How will you beat C++?

I haven’t been focusing on specific applications. Go is still young enough that if you run some microbenchmarks you can usually find something to optimize. For example, I just sped up floating point computation by about 25% a few weeks ago. I’m also working on more sophisticated analyses for things like escape analysis and bounds check elimination, which address problems that are unique to Go, or at least not problems that C++ faces.

Our goal is definitely not to beat C++ on performance. The goal for Go is to be near C++ in terms of performance but at the same time be a much more productive environment and language, so that you’d rather program in Go.

Q. What are the security features of Go?

Go is a type-safe and memory-safe language. There are no dangling pointers, no pointer arithmetic, no use-after-free errors, and so on.

You can break the rules by importing package unsafe, which gives you a special type unsafe.Pointer. You can convert any pointer or integer to an unsafe.Pointer and back. That’s the escape hatch, which you need sometimes, like for extracting the bits of a float64 as a uint64. But putting it in its own package means that unsafe code is explicitly marked as unsafe. If your program breaks in a strange way, you know where to look.
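The float64 example can be written as a short sketch. The helper name `float64bits` is chosen here for illustration; the standard library's `math.Float64bits` does the same job and is printed alongside for comparison.

```go
package main

import (
	"fmt"
	"math"
	"unsafe"
)

// float64bits reinterprets the memory holding f as a uint64.
// The import of package unsafe marks this file as rule-breaking code.
func float64bits(f float64) uint64 {
	return *(*uint64)(unsafe.Pointer(&f))
}

func main() {
	f := 1.0
	fmt.Printf("%#x\n", float64bits(f))
	// The safe standard library function should agree.
	fmt.Println(float64bits(f) == math.Float64bits(f))
}
```

Because the conversion only compiles in a file that imports unsafe, a search for that import finds every place where the type system is bypassed.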

Isolating this power also means that you can restrict it. On App Engine you can’t import package unsafe in the code you upload for your app.

I should point out that the current Go implementation does have data races, but they are not fundamental to the language. It would be possible to eliminate the races at some cost in efficiency, and for now we’ve decided not to do that. There are also tools such as Thread Sanitizer that help find these kinds of data races in Go programs.

Q. What language do you think Go is trying to displace?

I don’t think of Go that way. We were writing C++ code before we did Go, so we definitely wanted not to write C++ code anymore. But we’re not trying to displace all C++ code, or all Python code, or all Java code, except maybe in our own day-to-day work.

One of the surprises for me has been the variety of languages that new Go programmers used to use. When we launched, we were trying to explain Go to C++ programmers, but many of the programmers Go has attracted have come from more dynamic languages like Python or Ruby.

Q. How does Go make it possible to use multiple cores?

Go lets you tell the runtime how many operating system threads to use for executing goroutines, and then it muxes the goroutines onto those threads. So if you’ve written a program that has four or more goroutines executing simultaneously, you can tell the runtime to use four OS threads and then you’re running on four cores.
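A minimal sketch of that setup, using `runtime.GOMAXPROCS` to request four OS threads and a hypothetical `sumParallel` helper to give the goroutines something to do. (In current Go releases the default is already the number of available CPUs, so the explicit call is often unnecessary.)

```go
package main

import (
	"fmt"
	"runtime"
)

// sumParallel splits nums into roughly equal chunks, sums each chunk in its
// own goroutine, and combines the partial sums. workers must be positive.
func sumParallel(nums []int, workers int) int {
	partial := make(chan int)
	chunk := (len(nums) + workers - 1) / workers
	started := 0
	for start := 0; start < len(nums); start += chunk {
		end := start + chunk
		if end > len(nums) {
			end = len(nums)
		}
		started++
		go func(part []int) {
			s := 0
			for _, v := range part {
				s += v
			}
			partial <- s
		}(nums[start:end])
	}
	total := 0
	for i := 0; i < started; i++ {
		total += <-partial
	}
	return total
}

func main() {
	runtime.GOMAXPROCS(4) // let up to four OS threads run goroutines at once

	nums := make([]int, 100)
	for i := range nums {
		nums[i] = i + 1
	}
	fmt.Println(sumParallel(nums, 4))
}
```

The goroutine code is the same whether one thread or four are available; only the runtime setting changes.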

We’ve been pleasantly surprised by how easy people find it to write these kinds of programs. People who have not written parallel or concurrent programs before write concurrent Go programs using channels that can take advantage of multiple cores, and they enjoy the experience. That’s more than you can usually say for C threads. Joe Armstrong, one of the creators of Erlang, makes the point that thinking about concurrency in terms of communication might be more natural for people, since communication is something we’ve done for a long time. I agree.

Q. How does the muxing of goroutines work?

It’s not very smart. It’s the simplest thing that isn’t completely stupid: all the scheduling operations are O(1), and so on, but there’s a shared run queue that the various threads pull from. There’s no affinity between goroutines and threads, there’s no attempt to make sophisticated scheduling decisions, and there’s not even preemption.

The goroutine scheduler was the first thing I wrote when I started working on Go, even before I was working full time on it, so it’s just about four years old. It has served us surprisingly well, but we’ll probably want to replace it in the next year or so. We’ve been having some discussions recently about what we’d want to try in a new scheduler.

Q. Is there any plan to bootstrap Go in Go, to write the Go compiler in Go?

There’s no immediate plan. Go does ship with a Go program parser written in Go, so the first piece is already done, and there’s an experimental type checker in the works, but those are mainly for writing program analysis tools. I think that Go would be a great language to write a compiler in, but there’s no immediate plan. The current compiler, written in C, works well.
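To give a feel for the parser that ships with Go, here is a small sketch that uses `go/parser` and `go/ast` to list the functions declared in a piece of source text. The `funcNames` helper and the sample source are invented for this example.

```go
package main

import (
	"fmt"
	"go/ast"
	"go/parser"
	"go/token"
)

// funcNames parses Go source text and returns the names of the functions
// and methods declared at the top level of the file.
func funcNames(src string) ([]string, error) {
	fset := token.NewFileSet()
	file, err := parser.ParseFile(fset, "example.go", src, 0)
	if err != nil {
		return nil, err
	}
	var names []string
	for _, decl := range file.Decls {
		if fn, ok := decl.(*ast.FuncDecl); ok {
			names = append(names, fn.Name.Name)
		}
	}
	return names, nil
}

func main() {
	src := "package demo\n\nfunc Hello() {}\n\nfunc goodbye() {}\n"
	names, err := funcNames(src)
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	fmt.Println(names)
}
```

Program analysis tools are built in this style: parse to a syntax tree, then walk the declarations of interest.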

I’ve worked on bootstrapped languages in the past, and I found that bootstrapping is not necessarily a good fit for languages that are changing frequently. It reminded me of climbing a cliff and screwing hooks into the cliff once in a while to catch you if you fall. Once or twice I got into situations where I had identified a bug in the compiler, but then trying to write the code to fix the bug tickled the bug, so it couldn’t be compiled. And then you have to think hard about how to write the fix in a way that avoids the bug, or else go back through your version control history to find a way to replay history without introducing the bug. It’s not fun.

The fact that Go wasn’t written in itself also made it much easier to make significant language changes. Before the initial release we went through a handful of wholesale syntax upheavals, and I’m glad we didn’t have to worry about how we were going to rebootstrap the compiler or ensure some kind of backwards compatibility during those changes.

Finally, I hope you’ve read Ken Thompson’s Turing Award lecture, Reflections on Trusting Trust. When we were planning the initial open source release, we liked to joke that no one in their right mind would accept a bootstrapped compiler binary written by Ken.

Q. What does Go do to compile efficiently at scale?

This is something that we talked about a lot in early talks about Go. The main thing is that it cuts off transitive dependencies when compiling a single module. In most languages, if package A imports B, and package B imports C, then the compilation of A reads not just the compiled form of B but also the compiled form of C. In large systems, this gets out of hand quickly. For example, in C++ on my Mac, including <iostream> reads 25,326 lines from 131 files. (C and C++ headers aren't “compiled form,” but the problem is the same.) Go promises that each import reads a single compiled package file. If you need to know something about other packages to understand that package’s API, then the compiled file includes the extra information you need, but only that.

Of course, if you are building from scratch and package A imports B which imports C, then C has to be compiled first, and then B, and then A. The important point is that when you go to compile A, you don’t reload C’s object file. In a real program, the dependencies are usually not a chain like this. We might have A1, A2, A3, and so on all importing B. It’s a significant win if none of them need to reread C.

Q. How do you identify a good project for Go?

I think a good project for Go is one that you’re excited about writing in Go. Go really is a general purpose programming language, and except for the compiler work, it’s the only language I’ve written significant programs in for the past four years.

Most of the people I know who are using Go are using it for networked servers, where the concurrency features have something to contribute, but it’s great for other contexts too. I’ve used it to write a simple mail reader, file system implementations to read old disks, and a variety of other unnetworked programs.

Q. What is the current and future IDE support for Go?

I’m not an IDE user in the modern sense, so really I don’t know. We think that it would be possible to write a really nice IDE specifically for Go, but it’s not something we’ve had time to explore. The Go distribution has a misc directory that contains basic Go support for common editors, and there is a Goclipse project to write an Eclipse-based IDE, but I don’t know much about those.

The development environment I use, acme, is great for writing Go code, but not because of any custom Go support.

If you have more questions, please consult these resources.

May 25, 2012

newsham

The great latency race

You can make more money on Wall Street if you can get your orders into the queue first.

That statement is a gross oversimplification, but there is truth to it. Enough truth to cause Wall Street to hire lots of really, really smart people to make their systems run ever so slightly faster. Enough truth to cause Wall Street to move computers from one location to another just so that they don't have to wait for electronic signals to travel the longer distance. This is a pretty amazing thing! We've incentivized Wall Street to waste huge amounts of resources on something that shouldn't really matter. The market is a very important (and very impressive!) arbiter of our economic system and it provides great benefits to our society. The "latency race" to get orders into the order books is not one of them. Nor are the incentives that created the "latency race" a necessary part of the system.

One way we could eliminate the incentives that led to this madness is with a lottery. First, pick a time quantum, say 1ms. Next, change the order processing to batch together all orders received in the same time quantum that have matching prices into a pool. At the end of that quantum, process the orders in each pool in a random order. In effect, create a lottery for all orders placed "at the same time." Order processing proceeds as before; the only difference is that orders at the same price are processed in lottery order instead of first-come-first-served order. Suddenly there is no need to make automated trading software work any faster than the time quantum. The pointless "latency race" is put to rest.
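The batching scheme can be sketched as follows. Everything here is an assumption made for illustration: the `Order` type, prices in integer ticks, orders already sorted by arrival time, and a seeded random source standing in for whatever an exchange would really use.

```go
package main

import (
	"fmt"
	"math/rand"
	"time"
)

// Order is a hypothetical, stripped-down order record for this sketch.
type Order struct {
	ID      int
	Price   int           // price in ticks
	Arrival time.Duration // time since the session opened
}

// lotteryOrder returns orders in processing order. Orders are assumed to be
// sorted by arrival. Orders that arrive in the same quantum at the same
// price form a pool, and each pool is shuffled before it is emitted.
func lotteryOrder(orders []Order, quantum time.Duration, rng *rand.Rand) []Order {
	out := make([]Order, 0, len(orders))
	for start := 0; start < len(orders); {
		// Find the end of the batch that shares this time quantum.
		q := orders[start].Arrival / quantum
		end := start
		for end < len(orders) && orders[end].Arrival/quantum == q {
			end++
		}
		// Split the batch into per-price pools, remembering first-seen order.
		pools := map[int][]Order{}
		var prices []int
		for _, o := range orders[start:end] {
			if _, seen := pools[o.Price]; !seen {
				prices = append(prices, o.Price)
			}
			pools[o.Price] = append(pools[o.Price], o)
		}
		// The lottery: shuffle each pool, then emit it.
		for _, p := range prices {
			pool := pools[p]
			rng.Shuffle(len(pool), func(i, j int) { pool[i], pool[j] = pool[j], pool[i] })
			out = append(out, pool...)
		}
		start = end
	}
	return out
}

// sampleRun applies the lottery to three same-price orders that arrive
// within one millisecond and a fourth that arrives in a later quantum.
func sampleRun() []Order {
	orders := []Order{
		{ID: 1, Price: 100, Arrival: 100 * time.Microsecond},
		{ID: 2, Price: 100, Arrival: 200 * time.Microsecond},
		{ID: 3, Price: 100, Arrival: 300 * time.Microsecond},
		{ID: 4, Price: 100, Arrival: 1500 * time.Microsecond},
	}
	return lotteryOrder(orders, time.Millisecond, rand.New(rand.NewSource(1)))
}

func main() {
	for _, o := range sampleRun() {
		fmt.Println(o.ID, o.Arrival)
	}
}
```

Orders 1 to 3 come out in an arbitrary order, but order 4 always follows them: being 100 microseconds faster inside a quantum buys nothing.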

May 20, 2012

Stanley Lieber

(Untitled)

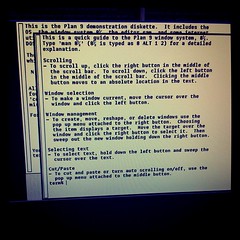

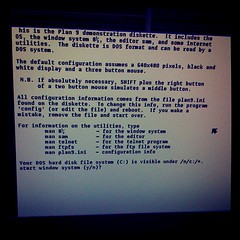

stanleylieber posted a photo:

turned out to be a photocopy.

stanleylieber posted a photo:

May 15, 2012

May 10, 2012

Software Magpie

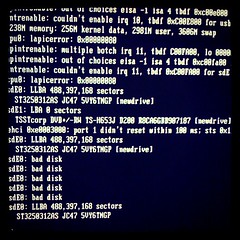

Terminal madness

Tonight I was updating an Ubuntu server to 12.04 LTS using do-release-upgrade.

It failed, because of a problem with my locale. Normally I use LANG=C to avoid some unpleasantness for a programming environment, but it was set to en_GB.UTF-8, which confused the libc update, aborting the upgrade and leaving the system in a highly inconsistent state. Wonderful.

A quick Google found a way round it:

apt-get -o APT::Immediate-Configure=0 -f install

which was fine (no, I've no idea either), and I'd set LANG=C just in case, but because I was using 9term, the apt-get initially failed with:

Unknown terminal: 9term

Check the TERM environment variable.

Also make sure that the terminal is defined in the terminfo database.

Alternatively, set the TERMCAP environment variable to the desired termcap entry.

debconf: whiptail output the above errors, giving up!

May 08, 2012

Stanley Lieber

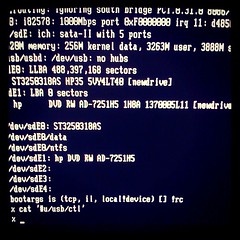

hp 8100c, *msi=1, *nousbehci=1, *nousbuhci=1

stanleylieber posted a photo:

April 20, 2012

April 17, 2012

Stanley Lieber

(Untitled)

stanleylieber posted a photo:

April 16, 2012

April 12, 2012

research!rsc

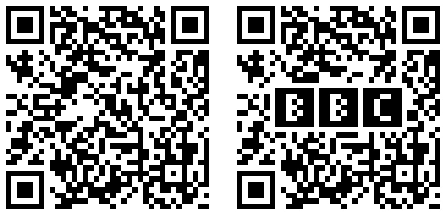

QArt Codes

QR codes are 2-dimensional bar codes that encode arbitrary text strings. A common use of QR codes is to encode URLs so that people can scan a QR code (for example, on an advertising poster, building roof, volleyball bikini, belt buckle, or airplane banner) to load a web site on a cell phone instead of having to “type” in a URL.

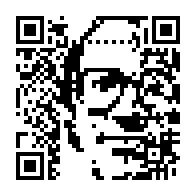

QR codes are encoded using Reed-Solomon error-correcting codes, so that a QR scanner does not have to see every pixel correctly in order to decode the content. The error correction makes it possible to introduce a few errors (fewer than the maximum that the algorithm can fix) in order to make an image. For example, in 2008, Duncan Robertson took a QR code for “http://bbc.co.uk/programmes” (left) and introduced errors in the form of a BBC logo (right):

That's a neat trick and a pretty logo, but it's uninteresting from a technical standpoint. Although the BBC logo pixels look like QR code pixels, they are not contributing to the QR code. The QR reader can't tell much difference between the BBC logo and the Union Jack. There's just a bunch of noise in the middle either way.

Since the BBC QR logo appeared, there have been many imitators. Most just slap an obviously out-of-place logo in the middle of the code. This Disney poster is notable for being more in the spirit of the BBC code.

There's a different way to put pictures in QR codes. Instead of scribbling on redundant pieces and relying on error correction to preserve the meaning, we can engineer the encoded values to create the picture in a code with no inherent errors, like these:

This post explains the math behind making codes like these, which I call QArt codes. I have published the Go programs that generated these codes at code.google.com/p/rsc and created a web site for creating these codes.

Background

For error correction, QR uses Reed-Solomon coding (like nearly everything else). For our purposes, Reed-Solomon coding has two important properties. First, it is what coding theorists call a systematic code: you can see the original message in the encoding. That is, the Reed-Solomon encoding of “hello” is “hello” followed by some error-correction bytes. Second, Reed-Solomon encoded messages can be XOR'ed: if we have two different Reed-Solomon encoded blocks b1 and b2 corresponding to messages m1 and m2, b1 ⊕ b2 is also a Reed-Solomon encoded block; it corresponds to the message m1 ⊕ m2. (Here, ⊕ means XOR.) If you are curious about why these two properties are true, see my earlier post, Finite Field Arithmetic and Reed-Solomon Coding.

QR Codes

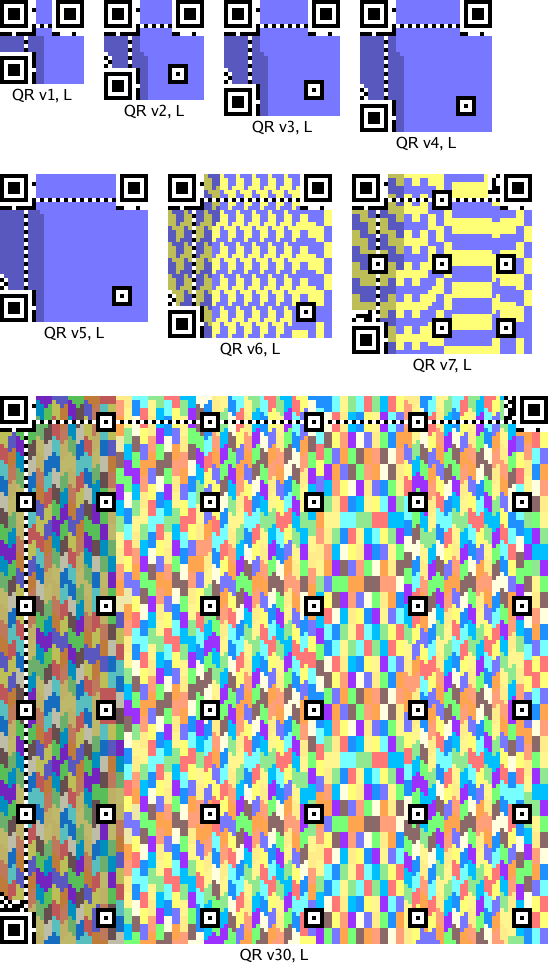

A QR code has a distinctive frame that helps both people and computers recognize it as a QR code. The details of the frame depend on the exact size of the code (bigger codes have room for more bits), but you know one when you see it: the outlined squares are the giveaway. Here are QR frames for a sampling of sizes:

The colored pixels are where the Reed-Solomon-encoded data bits go. Each code may have one or more Reed-Solomon blocks, depending on its size and the error correction level. The pictures show the bits from each block in a different color. The L encoding is the lowest amount of redundancy, about 20%. The other three encodings increase the redundancy, using 38%, 55%, and 65%.

(By the way, you can read the redundancy level from the top pixels in the two leftmost columns. If black=0 and white=1, then you can see that 00 is L, 01 is M, 10 is Q, and 11 is H. Thus, you can tell that the QR code on the T-shirt in this picture is encoded at the highest redundancy level, while this shirt uses the lowest level and therefore might take longer or be harder to scan.)

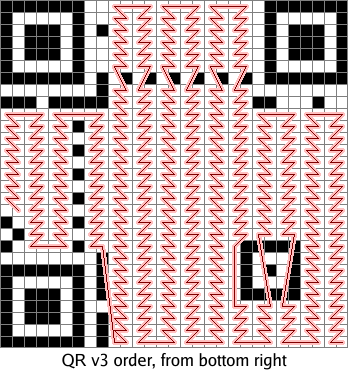

As I mentioned above, the original message bits are included directly in the message's Reed-Solomon encoding. Thus, each bit in the original message corresponds to a pixel in the QR code. Those are the lighter pixels in the pictures above. The darker pixels are the error correction bits. The encoded bits are laid down in a vertical boustrophedon pattern in which each line is two columns wide, starting at the bottom right corner and ending on the left side:

We can easily work out where each message bit ends up in the QR code. By changing those bits of the message, we can change those pixels and draw a picture. There are, however, a few complications that make things interesting.

QR Masks

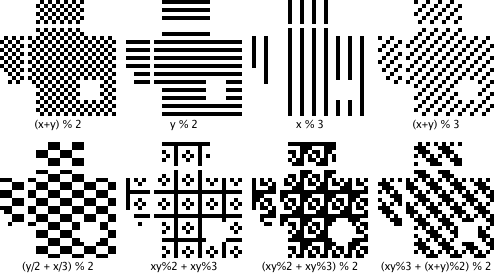

The first complication is that the encoded data is XOR'ed with an obfuscating mask to create the final code. There are eight masks:

An encoder is supposed to choose the mask that best hides any patterns in the data, to keep those patterns from being mistaken for framing boxes. In our encoder, however, we can choose a mask before choosing the data. This violates the spirit of the spec but still produces legitimate codes.

QR Data Encoding

The second complication is that we want the QR code's message to be intelligible. We could draw arbitrary pictures using arbitrary 8-bit data, but when scanned the codes would produce binary garbage. We need to limit ourselves to data that produces sensible messages. Luckily for us, QR codes allow messages to be written using a few different alphabets. One alphabet is 8-bit data, which would require binary garbage to draw a picture. Another is numeric data, in which every run of 10 bits defines 3 decimal digits. That limits our choice of pixels slightly: we must not generate a 10-bit run with a value above 999. That's not complete flexibility, but it's close: 9.96 bits of freedom out of 10. If, after encoding an image, we find that we've generated an invalid number, we pick one of the 5 most significant bits at random—all of them must be 1s to make an invalid number—hard wire that bit to zero, and start over.
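The arithmetic behind the numeric alphabet is easy to check with a few lines of Go. The helper names below are made up for this sketch and are not taken from the published QArt programs.

```go
package main

import (
	"fmt"
	"math"
)

// validRun reports whether a 10-bit run encodes three decimal digits,
// that is, whether its value is in the range 0..999.
func validRun(v uint16) bool { return v <= 999 }

// topFiveSet reports whether the 5 most significant of the 10 bits are all 1.
func topFiveSet(v uint16) bool { return v>>5 == 0x1f }

// digits returns the three decimal digits that a valid 10-bit run stands for.
func digits(v uint16) string { return fmt.Sprintf("%03d", v) }

func main() {
	// 1000 of the 1024 possible values are usable.
	fmt.Printf("%.3f bits of freedom per 10-bit run\n", math.Log2(1000))

	// Every invalid value (1000..1023) has its top five bits set, so
	// clearing any one of them is enough to force a valid number.
	for v := uint16(1000); v < 1024; v++ {
		if !topFiveSet(v) {
			fmt.Println("unexpected:", v)
		}
	}
	fmt.Println(digits(0x1ff), validRun(0x1ff))
}
```

The converse does not hold: values 992 through 999 also have their top five bits set and are valid, which is why the bit is only hard-wired after an invalid number actually turns up.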

Having only decimal messages would still not be very interesting: the message would be a very large number. Luckily for us (again), QR codes allow a single message to be composed from pieces using different encodings. The codes I have generated consist of an 8-bit-encoded URL ending in a # followed by a numeric-encoded number that draws the actual picture:

The leading URL is the first data encoded; it takes up the right side of the QR code. The error correction bits take up the left side.

When the phone scans the QR code, it sees a URL; loading it in a browser visits the base page and then looks for an internal anchor on the page with the given number. The browser won't find such an anchor, but it also won't complain.

The techniques so far let us draw codes like this one:

The second copy darkens the pixels that we have no control over: the error correction bits on the left and the URL prefix on the right. I appreciate the cyborg effect of Peter melting into the binary noise, but it would be nice to widen our canvas.

Gauss-Jordan Elimination

The third complication, then, is that we want to draw using more than just the slice of data pixels in the middle of the image. Luckily, we can.

I mentioned above that Reed-Solomon messages can be XOR'ed: if we have two different Reed-Solomon encoded blocks b1 and b2 corresponding to messages m1 and m2, b1 ⊕ b2 is also a Reed-Solomon encoded block; it corresponds to the message m1 ⊕ m2. (In the notation of the previous post, this happens because Reed-Solomon blocks correspond 1:1 with multiples of g(x). Since b1 and b2 are multiples of g(x), their sum is a multiple of g(x) too.) This property means that we can build up a valid Reed-Solomon block from other Reed-Solomon blocks. In particular, we can construct the sequence of blocks b0, b1, b2, ..., where bi is the block whose data bits are all zeros except for bit i and whose error correction bits are then set to correspond to a valid Reed-Solomon block. That set is a basis for the entire vector space of valid Reed-Solomon blocks. Here is the basis matrix for the space of blocks with 2 data bytes and 2 checksum bytes:

[Table: basis matrix with 16 rows, one per data bit, and 32 columns (16 data bits and 16 error correction bits); the columns for the data pixels are shaded gray.]

The missing entries are zeros. The gray columns highlight the pixels we have complete control over: there is only one row with a 1 for each of those pixels. Each time we want to change such a pixel, we can XOR our current data with its row to change that pixel, not change any of the other controlled pixels, and keep the error correction bits up to date.
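The basis is easy to compute. The sketch below assumes the QR parameters: the field GF(256) built with the polynomial x⁸+x⁴+x³+x²+1 (0x11d), and for two checksum bytes the generator g(x) = (x+1)(x+2) = x²+3x+2. The names gfMul, encode, valid, and basis are mine, not taken from the post's source code.

```go
package main

import "fmt"

// gfMul multiplies two elements of GF(256), reducing by 0x11d.
func gfMul(a, b byte) byte {
	var p byte
	for b != 0 {
		if b&1 != 0 {
			p ^= a
		}
		carry := a & 0x80
		a <<= 1
		if carry != 0 {
			a ^= 0x1d
		}
		b >>= 1
	}
	return p
}

// encode returns a 32-bit block: the 2 data bytes followed by the
// 2 checksum bytes, the remainder of d0·x³ + d1·x² divided by g(x).
func encode(d0, d1 byte) uint32 {
	var r1, r0 byte // remainder coefficients of x¹ and x⁰
	for _, d := range []byte{d0, d1} {
		f := d ^ r1
		r1 = r0 ^ gfMul(f, 3)
		r0 = gfMul(f, 2)
	}
	return uint32(d0)<<24 | uint32(d1)<<16 | uint32(r1)<<8 | uint32(r0)
}

// valid reports whether block is a multiple of g(x), by checking
// that it evaluates to zero at both roots of g (1 and 2).
func valid(block uint32) bool {
	c := [4]byte{byte(block >> 24), byte(block >> 16), byte(block >> 8), byte(block)}
	for _, x := range []byte{1, 2} {
		var y byte
		for _, coef := range c {
			y = gfMul(y, x) ^ coef // Horner's rule
		}
		if y != 0 {
			return false
		}
	}
	return true
}

// basis returns the 16 rows: row i has only data bit i set
// (counting from the left) plus matching checksum bits.
func basis() [16]uint32 {
	var b [16]uint32
	for i := range b {
		d := uint16(1) << (15 - i)
		b[i] = encode(byte(d>>8), byte(d))
	}
	return b
}

func main() {
	b := basis()
	var block uint32 // the all-zero block is valid
	for _, pixel := range []int{0, 5, 9} {
		block ^= b[pixel] // flip one data pixel, checksum follows along
	}
	fmt.Printf("%032b valid=%v\n", block, valid(block))
}
```

Each XOR flips exactly one data pixel and drags the checksum bits along with it, so the block is a valid Reed-Solomon block after every step.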

So what, you say. We're still just twiddling data bits. The canvas is the same.

But wait, there's more! The basis we had above lets us change individual data pixels, but we can XOR rows together to create other basis matrices that trade data bits for error correction bits. No matter what, we're not going to increase our flexibility—the number of pixels we have direct control over cannot increase—but we can redistribute that flexibility throughout the image, at the same time smearing the uncooperative noise pixels evenly all over the canvas. This is the same procedure as Gauss-Jordan elimination, the way you turn a matrix into row-reduced echelon form.

This matrix shows the result of trying to assert control over alternating pixels (the gray columns):

[Table: the same 16-row, 32-column basis after XORing rows together; the columns for the alternating pixels we asked for are shaded gray.]

The matrix illustrates an important point about this trick: it's not completely general. The data bits are linearly independent, but there are dependencies between the error correction bits that mean we often can't have every pixel we ask for. In this example, the last four pixels we tried to get were unavailable: our manipulations of the rows to isolate the first four error correction bits zeroed out the last four that we wanted.

In practice, a good approach is to create a list of all the pixels in the Reed-Solomon block sorted by how useful it would be to be able to set that pixel. (Pixels from high-contrast regions of the image are less important than pixels from low-contrast regions.) Then, we can consider each pixel in turn, and if the basis matrix allows it, isolate that pixel. If not, no big deal, we move on to the next pixel.
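A minimal sketch of that loop, with each basis row stored as a bit mask. The function name claim, the used slice, and the tiny 2-row matrix in main are made up for illustration; a real block would have many more rows and a pixel list sorted by importance.

```go
package main

import "fmt"

// claim tries to take control of column col (counted from the left of
// a width-bit row). On success exactly one row has a 1 in that column
// and that row is marked used. It returns false if only rows already
// dedicated to earlier columns could serve as the pivot.
func claim(rows []uint32, used []bool, col, width uint) bool {
	mask := uint32(1) << (width - 1 - col)
	pivot := -1
	for i := range rows {
		if !used[i] && rows[i]&mask != 0 {
			pivot = i
			break
		}
	}
	if pivot < 0 {
		return false // no big deal, move on to the next pixel
	}
	for i := range rows {
		if i != pivot && rows[i]&mask != 0 {
			rows[i] ^= rows[pivot] // clear the column everywhere else
		}
	}
	used[pivot] = true
	return true
}

func main() {
	rows := []uint32{0b1011, 0b0110} // hypothetical 4-bit-wide basis
	used := make([]bool, len(rows))
	for _, col := range []uint{2, 3, 0} { // pixels in priority order
		ok := claim(rows, used, col, 4)
		fmt.Printf("column %d claimed=%v rows=%04b\n", col, ok, rows)
	}
}
```

Because the pivot row always has zeros in every previously claimed column, XORing it into other rows never disturbs the pixels we already control.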

Applying this insight, we can build wider but noisier pictures in our QR codes:

The pixels in Peter's forehead and on his right side have been sacrificed for the ability to draw the full width of the picture.

We can also choose the pixels we want to control at random, to make Peter peek out from behind a binary fog:

Rotations

One final trick. QR codes have no required orientation. The URL base pixels that we have no control over are on the right side in the canonical orientation, but we can rotate the QR code to move them to other edges.

Further Information

All the source code for this post, including the web server, is at code.google.com/p/rsc/source/browse/qr. If you liked this, you might also like Zip Files All The Way Down.

Acknowledgements

Alex Healy pointed out that valid Reed-Solomon encodings are closed under XOR, which is the key to spreading the picture into the error correction pixels. Peter Weinberger has been nothing but gracious about the overuse of his binary likeness. Thanks to both.

April 10, 2012

research!rsc

Finite Field Arithmetic and Reed-Solomon Coding

Finite fields are a branch of algebra formally defined in the 1820s, but interest in the topic can be traced back to public sixteenth-century polynomial-solving contests. For the next few centuries, finite fields had little practical value, but that all changed in the last fifty years. It turns out that they are useful for many applications in modern computing, such as encryption, data compression, and error correction.

In particular, Reed-Solomon codes are error-correcting codes based on finite fields and used everywhere today. One early significant use was in the Voyager spacecraft: the messages it still sends back today, from the edge of the solar system, are heavily Reed-Solomon encoded so that even if only a small fragment makes it back to Earth, we can still reconstruct the message. Reed-Solomon coding is also used on CDs to withstand scratches, in wireless communications to withstand transmission problems, in QR codes to withstand scanning errors or smudges, in disks to withstand loss of fragments of the media, and in high-level storage systems like Google's GFS and BigTable to withstand data loss and also to reduce read latency (the read can complete without waiting for all the responses to arrive).

This post shows how to implement finite field arithmetic efficiently on a computer, and then how to use that to implement Reed-Solomon encoding.

What is a Finite Field?

One way mathematicians study numbers is to abstract away the numbers themselves and focus on the operations. (This is kind of an object-oriented approach to math.) A field is defined as a set F and operators + and · on elements of F that satisfy the following properties:

- (Closure) For all x, y in F, x+y and x·y are in F.

- (Associative) For all x, y, z in F, (x+y)+z = x+(y+z) and (x·y)·z = x·(y·z).

- (Commutative) For all x, y in F, x+y = y+x and x·y = y·x.

- (Distributive) For all x, y, z in F, x·(y+z) = (x·y)+(x·z).

- (Identity) There is some element we'll call 0 in F such that for all x in F, x+0 = x. Similarly, there is some element we'll call 1 in F such that for all x in F, x·1 = x.

- (Inverse) For all x in F, there is some element y in F such that x+y = 0. We write y = −x. Similarly, for all x in F except 0, there is some element y in F such that x·y = 1. We write y = 1/x.

You probably recognize those properties from high school algebra class: the most well-known example of a field is the real numbers, where + is addition and · is multiplication. Other examples are complex numbers and fractions.

A mathematician doesn't have to prove the same results over and over for the real numbers ℝ, the complex numbers ℂ, the fractions ℚ, and so on. Instead, she can prove that a particular result holds for all fields by assuming only the above properties, called the field axioms. Then she can apply the result by substituting a specific instance like the real numbers for the general idea of a field, the same way that a programmer can implement just one vector(T) and then instantiate it as vector(int), vector(string) and so on.

The integers ℤ are not a field: they lack multiplicative inverses. For example, there is no number that you can multiply by 2 to get 1, no 1/2. Surprisingly, though, the integers modulo any prime p do form a field. For example, the integers modulo 5 are 0, 1, 2, 3, 4. 1+4 = 0 (mod 5), so we say that 4 = −1. Similarly, 2·3 = 1 (mod 5), so we say that 3 = 1/2. After we've proved that ℤ/p is in fact a field, all the results about fields can be applied to ℤ/p. This is very useful: it lets us apply our intuition about the very familiar real numbers to these less familiar numbers. This field is written ℤ/p to emphasize that we're dealing with what's left after subtracting out all the p's. That is, we're dealing with what's left if you assume that p = 0. When you make that assumption, you get math that wraps around at p. These fields are called finite fields because, in contrast to fields like the real numbers, they have a finite number of elements.
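The ℤ/5 examples are easy to check by machine. This is a small sketch; the function names and the brute-force search for the inverse are my own choices, kept deliberately naive.

```go
package main

import "fmt"

const p = 5 // must be prime for ℤ/p to be a field

func add(x, y int) int { return (x + y) % p }
func mul(x, y int) int { return (x * y) % p }

// neg returns −x, the y with x+y = 0 (mod p).
func neg(x int) int { return (p - x) % p }

// inv returns 1/x by trying every candidate; it panics for x = 0,
// which has no multiplicative inverse.
func inv(x int) int {
	for y := 1; y < p; y++ {
		if mul(x, y) == 1 {
			return y
		}
	}
	panic("no inverse")
}

func main() {
	fmt.Println("1+4 =", add(1, 4)) // 0
	fmt.Println("-1  =", neg(1))    // 4
	fmt.Println("2*3 =", mul(2, 3)) // 1
	fmt.Println("1/2 =", inv(2))    // 3
}
```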

For a programmer, the most interesting finite field is ℤ/2, which contains just the integers 0, 1. Addition is the same as XOR, and multiplication is the same as AND. Note that ℤ/p is only a field when p is prime: arithmetic on uint8 variables corresponds to ℤ/256, but it is not a field: there is no 1/2.
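Both claims can be verified exhaustively. In this sketch, hasInverse is a name I made up; it relies on Go's uint8 multiplication wrapping around at 256.

```go
package main

import "fmt"

// hasInverse reports whether some uint8 y satisfies x·y = 1 (mod 256).
func hasInverse(x uint8) bool {
	for y := 1; y < 256; y++ {
		if x*uint8(y) == 1 {
			return true
		}
	}
	return false
}

func main() {
	// In ℤ/2, addition is XOR and multiplication is AND.
	for a := 0; a < 2; a++ {
		for b := 0; b < 2; b++ {
			fmt.Println(a, b, (a+b)%2 == a^b, (a*b)%2 == a&b)
		}
	}
	// In ℤ/256, 2 has no inverse, although odd numbers like 3 do.
	fmt.Println(hasInverse(2), hasInverse(3))
}
```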

What can you do with a field?

The only problem with fields is that there's not a ton you can do with just the field axioms. One thing you can do is build polynomials, which were the original motivation for the mathematicians who pioneered the use of fields in the early 1800s. If we introduce a symbolic variable x, then we can build polynomials whose coefficients are field values. We'll write F[x] to denote the polynomials over x using coefficients from F. For example, if we use the real numbers ℝ as our field, then the polynomials ℝ[x] include x²+1, x+2, and 3.14x² − 2.72x + 1.41. Like integers, these polynomials can be added and multiplied, but not always divided (what is (x²+1)/(x+2)?), so they are not a field. However, remember how the integers are not a field but the integers modulo a prime are a field? The same happens here: polynomials are not a field but polynomials modulo some prime polynomial are.

What does “polynomials modulo some prime polynomial” mean anyway? A prime polynomial is one that cannot be factored, like x²+1 cannot be factored using real numbers. The field ℤ/5 is what you get by doing math under the assumption that 5 = 0; similarly, ℝ[x]/(x²+1) is what you get by doing math under the assumption that x²+1 = 0. Just as ℤ/5 math never deals with numbers as big as 5, ℝ[x]/(x²+1) math never deals with polynomials as big as x²: anything bigger can have some multiple of x²+1 subtracted out again. That is, the polynomials in ℝ[x]/(x²+1) are bounded in size: they have only x¹ and x⁰ (constant) terms. To add polynomials, we just add the coefficients using the addition rule from the coefficients' field, independently, like a vector addition. To multiply polynomials, we have to do the multiplication and then subtract out any x²+1 we can. If we have (ax+b)·(cx+d), we can expand this to (a·c)x² + (b·c+a·d)x + (b·d), and then subtract (a·c)(x²+1) = (a·c)x² + (a·c), producing the final result: (b·c+a·d)x + (b·d−a·c). That might seem like a funny definition of multiplication, but it does in fact obey the field axioms. In fact, this particular field is more familiar than it looks: it is the complex numbers ℂ, but we've written x instead of the usual i. Assuming that x²+1 = 0 is, except for a renaming, the same as defining i² = −1.
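To see that this really is complex multiplication in disguise, here is a sketch that implements ℝ[x]/(x²+1) directly from the formulas above and compares it with Go's built-in complex128. The poly type and its methods are illustrative names, and float64 stands in for the real numbers.

```go
package main

import "fmt"

// poly is the polynomial a·x + b in ℝ[x]/(x²+1).
type poly struct{ a, b float64 }

// add adds coefficient by coefficient, like a vector addition.
func (p poly) add(q poly) poly {
	return poly{p.a + q.a, p.b + q.b}
}

// mul computes (ax+b)·(cx+d) = (b·c+a·d)x + (b·d−a·c),
// the product with the multiple of x²+1 already subtracted out.
func (p poly) mul(q poly) poly {
	return poly{p.b*q.a + p.a*q.b, p.b*q.b - p.a*q.a}
}

func main() {
	p := poly{2, 1} // 2x + 1
	q := poly{4, 3} // 4x + 3
	fmt.Println(p.mul(q))                    // {10 -5}, that is, 10x − 5
	fmt.Println(complex(1, 2) * complex(3, 4)) // (-5+10i)

	x := poly{1, 0}
	fmt.Println(x.mul(x)) // {0 -1}: x² = −1
}
```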

Doing all our math modulo a prime polynomial let us take the field of real numbers and produce a field whose elements are pairs of real numbers. We can apply the same trick to take a finite field like ℤ/p and produce a field whose elements are fixed-length vectors of elements of ℤ/p. The original ℤ/p has p elements. If we construct (ℤ/p)[x]/f(x), where f(x) is a prime polynomial of degree n (f's maximum x exponent is n), the resulting field has pⁿ elements: all the vectors made up of n elements from ℤ/p. Incredibly, the choice of prime polynomial doesn't matter very much: any two finite fields of size pⁿ have identical structure, even if they give the individual elements different names. Because of this, it makes sense to refer to all the finite fields of size pⁿ as one concept: GF(pⁿ). The GF stands for Galois Field, in honor of Évariste Galois, who was the first to study these. The exact polynomial chosen to produce a particular GF(pⁿ) is an implementation detail.
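As a small concrete case, take p = 2 and n = 3. The sketch below builds GF(8) as (ℤ/2)[x]/(x³+x+1), storing each polynomial as the bits of an integer so that coefficient addition is XOR. The choice of x³+x+1 and the function name mul are mine; this is a straightforward implementation, not an efficient one.

```go
package main

import "fmt"

// mul multiplies a and b in (ℤ/2)[x]/f(x). Bit i of each value is
// the coefficient of xⁱ; f has degree n, so bit n of f is set.
func mul(a, b, f, n uint) uint {
	// Multiply as polynomials over ℤ/2: shift and XOR.
	var prod uint
	for i := uint(0); i < n; i++ {
		if b&(1<<i) != 0 {
			prod ^= a << i
		}
	}
	// Subtract out multiples of f until the degree is below n.
	for i := 2*n - 2; i >= n; i-- {
		if prod&(1<<i) != 0 {
			prod ^= f << (i - n)
		}
	}
	return prod
}

func main() {
	const f, n = 0b1011, 3 // x³+x+1
	fmt.Printf("x * x² = %03b\n", mul(0b010, 0b100, f, n))
	// Every nonzero element should have a multiplicative inverse.
	for a := uint(1); a < 1<<n; a++ {
		for b := uint(1); b < 1<<n; b++ {
			if mul(a, b, f, n) == 1 {
				fmt.Printf("1/%03b = %03b\n", a, b)
			}
		}
	}
}
```

The field has 2³ = 8 elements, the 3-bit vectors, and the loop finds an inverse for each of the seven nonzero ones.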